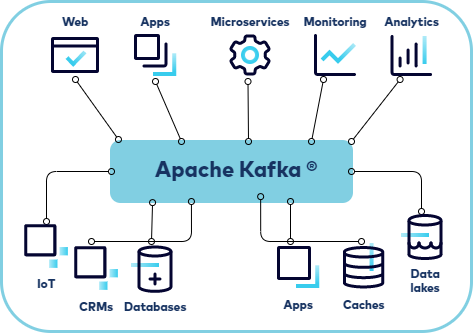

Kafka is the central nervous system of a microservices architecture, passing events between producers and consumers. Kafka brokers store those events in topics, which are similar to folders on a filesystem.

You can connect your Kafka cluster to external systems using connectors. Confluent offers many of these, including simple ones that ingest data into Kafka and export it from a topic.

Key Points Covered in the Article

- Kafka is a central nervous system of a microservices architecture, facilitating event passing between producers and consumers.

- Kafka brokers store events in topics, similar to folders on a filesystem.

- Kafka can be connected to external systems using connectors, including those offered by Confluent.

- Kafka is an event streaming platform that provides a distributed message queue for applications.

- Kubernetes is a container orchestration system ideal for managing microservices and also supports Kafka.

- Installing Kafka on Kubernetes involves creating a new namespace and verifying the creation of Kafka pods.

- Kafka is efficient for managing and analyzing large amounts of data, ensuring reliability and scalability.

- Kubernetes can recover failed Kafka pods by rescheduling them on different nodes, although the replacement broker may see higher I/O latency.

- Understanding how Kafka and Kubernetes work together is crucial for designing and troubleshooting Kafka on Kubernetes.

- Kafka on Kubernetes can be configured to handle network failures and ensure high availability using StatefulSets and replication.

- Monitoring systems can be set up to ensure the proper functioning of the Kafka cluster, and logging can aid in debugging.

- Kubernetes is a widely used container orchestration system that enables reliable deployment of Kafka on top of a public cloud.

- Node-based deployment of Kafka allows for resilience against infrastructure failures by distributing brokers across machines and availability zones.

- Using Kafka partitions and an appropriate replication strategy minimizes I/O and facilitates quick recovery from failed nodes.

Install Kafka on Kubernetes

Kafka is an event streaming platform that provides a distributed message queue for applications to publish and subscribe to streams of records.

Kafka is deployed as a cluster of brokers and uses a publish-subscribe model that allows consumers and producers to connect to the same topic.
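To make the publish-subscribe model concrete, here is a minimal in-memory sketch. It is not Kafka client code: `ToyTopic` is a hypothetical class that only imitates one idea, namely that a topic is an append-only log and each consumer group keeps its own read position, so several consumers can read the same topic independently.

```python
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for a single-partition topic (illustration only)."""

    def __init__(self, name):
        self.name = name
        self.log = []                    # append-only list of records
        self.offsets = defaultdict(int)  # next offset to read, per consumer group

    def publish(self, record):
        """Append a record and return the offset it was written at."""
        self.log.append(record)
        return len(self.log) - 1

    def poll(self, group):
        """Return the records this group has not read yet and advance its offset."""
        start = self.offsets[group]
        records = self.log[start:]
        self.offsets[group] = len(self.log)
        return records


orders = ToyTopic("orders")
orders.publish("order-1")
orders.publish("order-2")

billing_batch = orders.poll("billing")    # billing reads the first two records
orders.publish("order-3")
shipping_batch = orders.poll("shipping")  # shipping starts from the beginning
billing_next = orders.poll("billing")     # billing only sees the new record

print(billing_batch, shipping_batch, billing_next)
```

Reading does not remove records from the log, which is why the hypothetical `shipping` group still sees everything that `billing` has already consumed.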

This tool has numerous applications, including event-driven architectures, microservices, and IoT implementations.

Kubernetes, a container orchestration system created by Google and now managed by the CNCF, is highly efficient at handling and scaling containerized workloads such as microservices.

It automates the deployment, configuration, and scaling of containers and provides a platform for monitoring and managing these workloads.

It can also run stateful workloads such as Kafka, which makes it a practical choice for hosting a Kafka cluster alongside the services that use it.

In this example, we will install two Kafka pods in a Kubernetes cluster. First, create a new namespace by typing `kubectl create namespace kafka` in a terminal window. Namespace names must be lowercase.

After deploying your Kafka resources into that namespace, verify that the Kafka pods have been created by typing `kubectl get pods -n kafka`. You should see a ZooKeeper pod and two Kafka pods.

Keep in mind that Kafka brokers are stateful. After a reboot or relocation they must restart and recover their data, so they can take much longer to recover from failure than a typical stateless microservice.

Deploy Kafka on Kubernetes

Kafka is a distributed streaming platform that makes creating and consuming data streams easy for applications.

It is well suited to teams that manage and analyze large volumes of data, particularly for real-time analytics and applications.

It also helps ensure the reliability of applications by storing messages in a highly durable and scalable way.

Kafka brokers store data in files organized by topic and partition. Kubernetes can automatically recover pods and containers that fail, and a failed Kafka broker can be restarted as a replacement pod on a different node.

However, a broker that has to rebuild its data over the network can have higher I/O latency than the original. Because Kafka replicates partitions across brokers, the data stored on a failed broker remains accessible through the other brokers in the cluster.

If you’re planning to run Kafka on Kubernetes, it’s crucial to understand how Kafka and Kubernetes work together. This will make it easier to design your setup and to troubleshoot problems if they occur.

This blog post will walk you through installing the Kafka operator on Kubernetes and deploying your first Kafka resource using Minikube.

It will then show you how to connect a client to the Kafka cluster and produce and consume test messages. Ultimately, you will have a fully functioning Kafka cluster on Kubernetes.

Configure Kafka on Kubernetes

Networks are unreliable, and all kinds of things can go wrong. In the real world, servers go down, and processes run out of memory and crash.

Kubernetes deals with these failures by letting you specify the desired state of your application resources and then continuously reconciling it with the actual state, ensuring that your applications can always access data.
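The reconciliation idea can be sketched in a few lines. This is a toy model, not Kubernetes code: `reconcile` and `apply_actions` are hypothetical helpers, and the pod names are made up. It only shows the pattern of comparing desired state with actual state and deriving the actions that close the gap.

```python
def reconcile(desired, actual):
    """Compare desired and actual pod names and return the actions needed."""
    to_create = sorted(desired - actual)  # pods that should exist but do not
    to_delete = sorted(actual - desired)  # pods that exist but should not
    return to_create, to_delete


def apply_actions(actual, to_create, to_delete):
    """Return the new actual state after carrying out the actions."""
    return (actual | set(to_create)) - set(to_delete)


desired = {"kafka-0", "kafka-1", "kafka-2"}
actual = {"kafka-0", "kafka-2"}  # assume kafka-1 has just crashed

to_create, to_delete = reconcile(desired, actual)
actual = apply_actions(actual, to_create, to_delete)

print(to_create, to_delete, sorted(actual))
```

A real controller runs this comparison continuously, so a second pass over the repaired state finds nothing left to do.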

For instance, you can deploy Kafka as a StatefulSet with three or more replicas, so that if one broker fails, the remaining brokers can continue to serve read and write requests from clients, provided the topics are replicated across brokers.
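As a rough illustration, the following sketch builds a StatefulSet manifest with three replicas as a Python dictionary and prints it as JSON. The image name, service name, storage size, and mount path are placeholder assumptions, and the manifest deliberately omits the broker configuration a working cluster would need.

```python
import json


def kafka_statefulset(name="kafka", namespace="kafka", replicas=3,
                      image="example.com/kafka:latest", storage="10Gi"):
    """Build a skeleton StatefulSet manifest (all default values are placeholders)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "serviceName": f"{name}-headless",  # assumed headless Service name
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "kafka",
                        "image": image,
                        "ports": [{"containerPort": 9092}],
                        "volumeMounts": [
                            {"name": "data", "mountPath": "/var/lib/kafka/data"}
                        ],
                    }],
                },
            },
            # One PersistentVolumeClaim per broker pod, created from this template.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": storage}},
                },
            }],
        },
    }


manifest = kafka_statefulset()
print(json.dumps(manifest, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and used as a starting point once a real image and broker settings are filled in.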

You can also configure Kafka to use replication and data mirroring, allowing you to spread your Kafka cluster across availability zones so that if any single availability zone or host fails, your Kafka cluster remains active and continues to serve applications with data.
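The effect of replication across availability zones can be checked with a small simulation. The sketch below is a simplified stand-in for Kafka's own replica assignment: the broker names, zone names, and the round-robin placement are assumptions made for illustration. It places partition replicas on brokers and then reports which partitions would have no surviving replica if one zone failed.

```python
def assign_replicas(num_partitions, brokers, replication_factor):
    """Place each partition's replicas on consecutive brokers, round-robin."""
    return {
        p: [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def partitions_lost(assignment, broker_zone, failed_zone):
    """Return partitions whose replicas all live in the failed zone."""
    return [
        p for p, replicas in assignment.items()
        if all(broker_zone[b] == failed_zone for b in replicas)
    ]


# Hypothetical layout: one broker per availability zone.
broker_zone = {"kafka-0": "zone-a", "kafka-1": "zone-b", "kafka-2": "zone-c"}
brokers = list(broker_zone)

unreplicated = assign_replicas(6, brokers, replication_factor=1)
replicated = assign_replicas(6, brokers, replication_factor=2)

lost_without_replication = partitions_lost(unreplicated, broker_zone, "zone-a")
lost_with_replication = partitions_lost(replicated, broker_zone, "zone-a")

print(lost_without_replication, lost_with_replication)
```

With a single replica, every partition placed in the failed zone becomes unavailable. With two replicas spread over different zones, each partition keeps at least one copy outside the failed zone.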

In addition, you can set up a monitoring system to check that the Kafka cluster is working fine. Also, you can configure all containers to log stdout and stderr to make it easier to debug any issues.

Start Kafka on Kubernetes

Kubernetes is a container orchestration system large organizations use to deploy, scale, and manage their containerized applications.

Google originally developed it, drawing on experience with its internal cluster manager Borg, and later donated it to the Cloud Native Computing Foundation (CNCF). It is now one of the world’s most widely used container management platforms.

Kubernetes provides features that enable reliable deployment of Kafka on top of a public cloud.

This article covers these features and explains how they can be used to ensure Kafka keeps running even when the underlying infrastructure fails.

The primary reason for using node-based deployment is to deploy Kafka brokers on different machines and in different availability zones.

If one machine or one availability zone goes down, the rest of the Kafka cluster will remain active and serve applications with data. Similarly, using persistent storage on non-local volumes allows the cluster to recover from a failing broker by simply reattaching the persistent volume on another node.

In addition, it’s vital to use Kafka partitions and a replication strategy that minimizes I/O. This allows the cluster to quickly rebuild any lost brokers and prevents performance degradation after a node fails.
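A back-of-the-envelope calculation shows why the recovery path matters for I/O. The figures below are invented for illustration only. The sketch compares a broker that must copy all of its partition data again from other replicas with a broker that reattaches its existing persistent volume and only has to catch up on what was written while it was down.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def full_rebuild_bytes(partition_sizes):
    """Bytes copied when a replacement broker starts with an empty disk."""
    return sum(partition_sizes)


def catch_up_bytes(write_rate_bytes_per_s, downtime_s):
    """Bytes copied when the broker keeps its data and only missed recent writes."""
    return write_rate_bytes_per_s * downtime_s


# Hypothetical broker: 4 partitions of 50 GiB each, 10 MiB/s of incoming writes,
# and 5 minutes of downtime before the pod is running again.
partition_sizes = [50 * GIB] * 4
rebuild = full_rebuild_bytes(partition_sizes)
catch_up = catch_up_bytes(10 * MIB, 5 * 60)

print(f"full rebuild: {rebuild / GIB:.0f} GiB")
print(f"catch-up:     {catch_up / GIB:.2f} GiB")
```

Under these assumed numbers the catch-up path moves a small fraction of the data, which is the motivation for keeping broker data on volumes that can follow the pod to another node.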

Conclusion

In conclusion, Kafka is a powerful event-streaming platform crucial in microservices architectures. It acts as the central nervous system, facilitating the exchange of events between producers and consumers. With its ability to handle event-driven architectures, microservices, and IoT implementations, Kafka is a versatile tool for managing and processing streams of records.

Deploying Kafka on Kubernetes offers significant benefits. Kubernetes provides efficient container orchestration, automated deployment, and scalability for microservices. It supports Kafka, making it ideal for managing containerized Kafka deployments. Kafka clusters can ensure reliability and fault tolerance by leveraging Kubernetes’ features, such as high availability, automatic recovery, and replication strategies.

In summary, the combination of Kafka and Kubernetes allows for seamlessly integrating event streaming capabilities into microservices architectures. This enables efficient data processing, scalability, and resilience, making it a preferred solution for building robust and scalable systems.
